When we perform massive migrations or very large refactorings, how can we ensure that our service keeps running as it should?

In this post, we will talk about shadow testing.

1 - What is shadow testing?

Shadow testing is a technique that gives us more confidence when we ship changes to production.

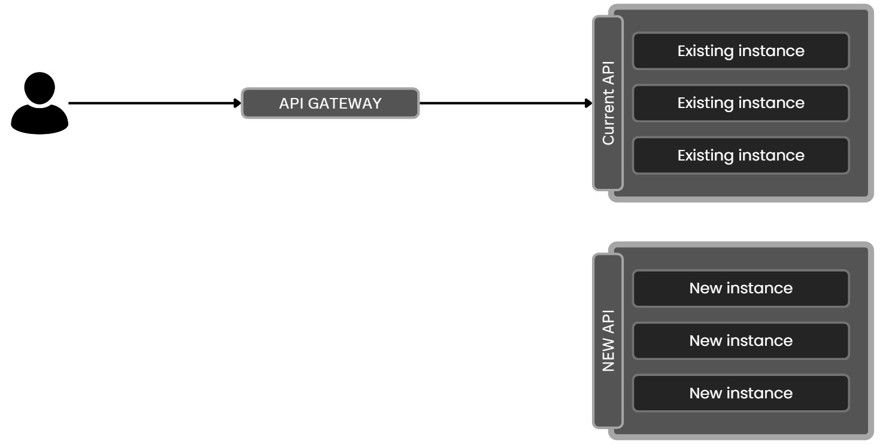

The idea behind this technique is simple: when you make a change and deploy it, you don't replace the running application. Instead, the new version runs in parallel with it.

Why do this?

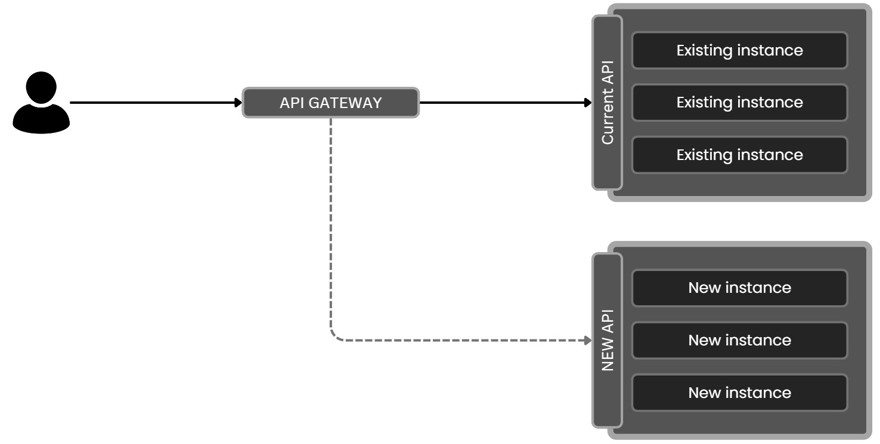

Every request that goes to our production service is also sent to our new service. Clients still receive only the response from the production service.

This way, we can check that both services return the same response and make sure the change doesn't break any of our clients.

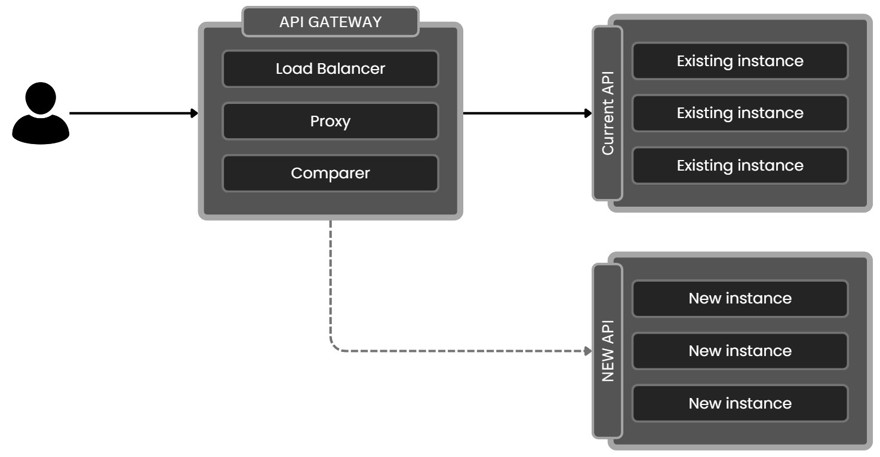

There are different techniques and places where this can be done. If you use a cloud provider like AWS, you can set it up in CloudFront so that requests to a certain URL are also sent to another one, and then compare the results (you can even run code in CloudFront).

If you use your own API Gateway like YARP or Ocelot, you can always write the code manually to perform this comparison.
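To make the idea concrete, here is a minimal sketch of that manual approach in Python. It is not tied to YARP, Ocelot, or any real gateway: `ShadowProxy`, `primary`, `shadow`, and `on_mismatch` are hypothetical names, and the two services are modeled as plain functions that take a request dictionary and return a response dictionary. The client only ever gets the production response, and the mirrored call runs in the background.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Assumption: a "service" is any callable that maps a request dict to a response dict.
Handler = Callable[[dict], dict]


class ShadowProxy:
    """Serve every request from `primary` and mirror it to `shadow`."""

    def __init__(self, primary: Handler, shadow: Handler,
                 on_mismatch: Callable[[dict, dict, object], None]):
        self.primary = primary
        self.shadow = shadow
        self.on_mismatch = on_mismatch
        # Mirrored calls run on worker threads so they don't slow down the client.
        self._pool = ThreadPoolExecutor(max_workers=4)

    def handle(self, request: dict) -> dict:
        response = self.primary(request)
        # Pass a copy so the shadow service cannot mutate the caller's request.
        self._pool.submit(self._mirror, dict(request), response)
        # The client only ever sees the production response.
        return response

    def _mirror(self, request: dict, primary_response: dict) -> None:
        try:
            shadow_response = self.shadow(request)
        except Exception as exc:
            # A crash in the new version is reported, never propagated to the client.
            self.on_mismatch(request, primary_response, exc)
            return
        if shadow_response != primary_response:
            self.on_mismatch(request, primary_response, shadow_response)

    def close(self) -> None:
        # Wait for pending mirrored calls before shutting down.
        self._pool.shutdown(wait=True)
```

In a real gateway the two handlers would be HTTP calls to the old and new deployments, and `on_mismatch` would feed your monitoring. The important design choice is that a failure or slowdown in the shadow path never affects what the client receives.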

In any case, how you implement it or what technology you use matters less than the concept itself.

What we have to do is check that the responses are identical, and we need monitoring and logs to back that up. An alert saying that the responses differ is not enough: we also need to know why, and ideally we need the user's original request so we can replicate the scenario.
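As a rough illustration of "knowing why", the comparison can report which fields differ and log them together with the original request. This is only a sketch: `diff_responses`, `report_mismatch`, and the `ignore` parameter are hypothetical names, and ignoring volatile fields such as timestamps is an assumption on my side, since in practice two healthy services rarely return byte-identical responses.

```python
import json
import logging

logger = logging.getLogger("shadow")

# Placeholder used when a field exists in only one of the two responses.
MISSING = "<missing>"


def diff_responses(primary: dict, shadow: dict, ignore=frozenset()) -> dict:
    """Return {field: (primary_value, shadow_value)} for every field that differs."""
    diff = {}
    for key in sorted((primary.keys() | shadow.keys()) - set(ignore)):
        old = primary.get(key, MISSING)
        new = shadow.get(key, MISSING)
        if old != new:
            diff[key] = (old, new)
    return diff


def report_mismatch(request: dict, primary: dict, shadow: dict, ignore=frozenset()) -> dict:
    """Log the differing fields together with the request that produced them."""
    diff = diff_responses(primary, shadow, ignore)
    if diff:
        # Logging the request is what lets us replay the exact scenario later.
        logger.warning(
            "shadow mismatch: %s",
            json.dumps({"request": request, "diff": diff}, default=str),
        )
    return diff
```

Keep in mind that logging full requests may capture personal data, so you might need to redact some fields before writing them.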

2 - When to implement shadow testing?

Ideally, the answer would be always, at least for a little while after each production deployment, but in reality it's easier said (and explained) than done.

Implementing shadow testing is expensive because, at first glance, you're doubling the number of requests to a service. But what if that service calls another, which in turn calls another, and one or both of those are also being deployed? The load multiplies at every hop, so the resources the system needs, including database resources, can grow exponentially with the depth of the call chain.
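A quick back-of-the-envelope calculation shows how fast this adds up. The sketch below assumes a simple linear call chain where each shadow copy makes real downstream calls (nothing is stubbed or deduplicated). The function name `requests_per_level` is made up for this example.

```python
def requests_per_level(shadowed: list[bool]) -> list[int]:
    """For ONE incoming request, count the requests each service in a call chain handles.

    `shadowed[i]` says whether the i-th service in the chain is currently
    running an old and a new version side by side.
    """
    counts = []
    current = 1
    for is_shadowed in shadowed:
        if is_shadowed:
            # Every call reaching this level is sent to both versions.
            current *= 2
        counts.append(current)
    return counts
```

With three services in a chain and all of them being shadow tested, `requests_per_level([True, True, True])` gives `[2, 4, 8]`: the last service, and probably its database, handles eight requests for every real one. If only the first and last are shadowed, `requests_per_level([True, False, True])` gives `[2, 2, 4]`.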

So it is quite expensive. If you run it for 10 minutes during off-peak times, you may not notice the cost, but if you run shadow testing on every deployment, you definitely will.

Besides the infrastructure bill, there's the cost of development and maintenance: all that logic needs to be written and maintained, which is not easy.

Still, there are situations where it can save us from future problems.

A - Migrations: When you migrate an API from one system to another, from one language to another, or even between versions of the same platform (for example, from .NET Framework to the latest version of .NET), and the app's logic is even a bit complex, you should follow the same practices as if you were migrating from Ruby to Go. That means running both apps in parallel, comparing results for a few minutes, hours, or days, and doing the final deployment only if all goes well.

B - Refactorings: In the real world, an app rarely gets refactored unless a dependency changes or a process demands a big change. In those scenarios, when you change a large portion of code, you also want to be sure its behavior hasn't changed. You'll have your unit and integration tests, but those kinds of tests rarely catch the edge cases. So, having both apps running in parallel and comparing results can save us from problems and headaches.

3 - Combining shadow testing with other techniques

You shouldn't rely on shadow testing alone. Use it as one of the tools you have for verifying changes in production.

Just because you have shadow testing in your system doesn’t mean you should eliminate unit or integration tests, since those tests will catch errors or issues long before shadow testing would.

The same applies to having multiple environments (dev/staging/prod): just because you do shadow testing and other techniques doesn't mean you should stop using those environments.

Ideally, the shadow testing technique should be completely transparent to the developer and the whole process should be fully automated. For example, I have used it together with canary deployments. This means you deploy and run both versions in parallel and, after a while, gradually remove instances of the old version, replacing them with the new ones.
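To give an idea of how the two techniques can work together, here is a small sketch where each step of the canary rollout only moves forward while the shadow comparison stays clean. The function `next_split`, its parameters, and the idea of gating on a mismatch rate are assumptions for the example, not a description of any specific deployment tool.

```python
def next_split(old: int, new: int, mismatch_rate: float,
               max_mismatch_rate: float = 0.0, batch: int = 1) -> tuple[int, int]:
    """Return the (old, new) instance counts for the next rollout step.

    The rollout holds its position while the observed mismatch rate
    is above the allowed threshold.
    """
    if mismatch_rate > max_mismatch_rate:
        # Shadow comparison found too many differences: do not replace anything.
        return old, new
    # Replace up to `batch` old instances, never more than are left.
    moved = min(batch, old)
    return old - moved, new + moved
```

For example, starting from four old instances, `next_split(4, 0, mismatch_rate=0.0)` returns `(3, 1)`, while `next_split(3, 1, mismatch_rate=0.02)` returns `(3, 1)` again because the comparison is reporting differences that someone needs to look at first.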