What began as simple autocompletion is evolving to unprecedented levels. Since my last AI-related content was so well received, I’ve decided to expand on a topic I’m frequently asked about: comparisons between different AIs when working professionally as a developer.

Table of Contents

Note: This post is current as of July 2025; things may change in the future, and they likely will.

1- Problem Scenario Description

We’ll tackle a single technical task designed to replicate a real-life work scenario.

In this case, I’ll ask for the implementation of the outbox pattern into a specific microservice within my Distribt project, which is a showcase of distributed systems for those not familiar with it.

All AIs get the same initial prompt, and from there, we’ll see where the experiment takes us.

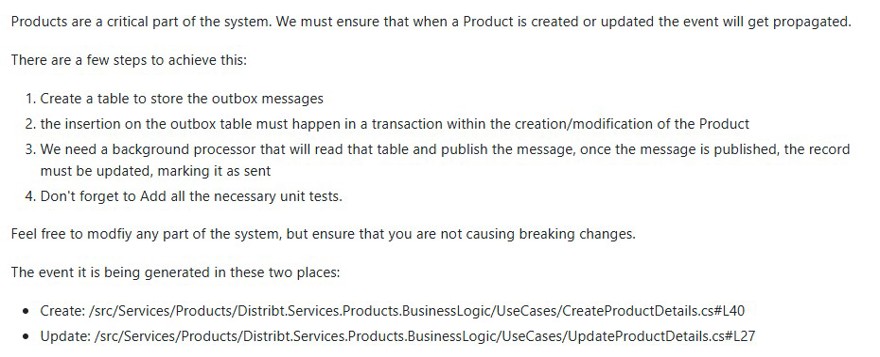

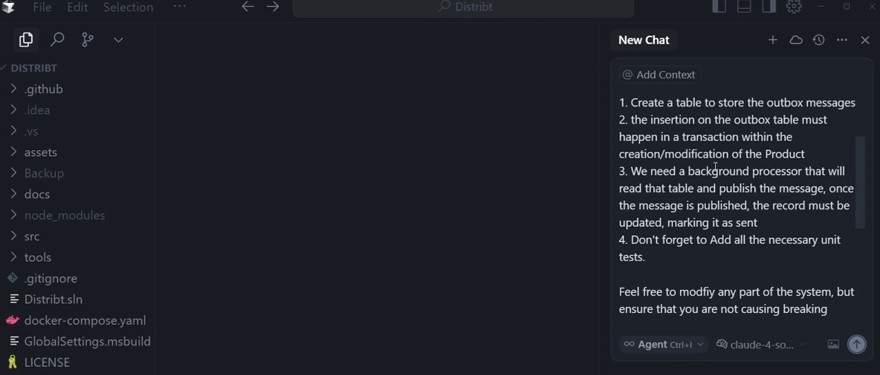

The initial task/prompt is as follows:

This is this GitHub issue, and you can see ALL the results in the linked PRs, both in this post and in the issue itself.

The Spanish translation is the following:

Los productos son una parte fundamental del sistema. Debemos asegurarnos de que, cuando se cree o actualice un Producto, el evento se propague correctamente.Hay varios pasos para lograrlo:- Crear una tabla para almacenar los mensajes de la outbox.- La inserción en la tabla de outbox debe ocurrir dentro de la misma transacción que la creación o modificación del Producto.- Necesitamos un procesador en segundo plano que lea esa tabla y publique el mensaje; una vez publicado, el registro debe actualizarse marcándolo como enviado.- No olvides añadir pruebas unitarias.Siéntete libre de modificar cualquier parte del sistema, pero asegúrate de no provocar cambios incompatibles.El evento se genera en estos dos lugares:Creación: https://github.com/ElectNewt/Distribt/blob/main/src/Services/Products/Distribt.Services.Products.BusinessLogic/UseCases/CreateProductDetails.cs#L40Actualización: https://github.com/ElectNewt/Distribt/blob/main/src/Services/Products/Distribt.Services.Products.BusinessLogic/UseCases/UpdateProductDetails.cs#L27We can argue that this is a very well-written ticket and what I’m asking is clear, but that’s the reality of making requests to AI—you need to be concise with your requirements.

1.1 - Evaluation Criteria

To evaluate the different AIs, I’ll use very simple but, in my view, essential criteria.

- Functional correctness (0-20)

- Fulfills requirements (0-10)

- Maintainable (0-10)

- Easy to understand and update (0-5)

- Unit test evaluation (0-2)

- Consistency in standards and style (0-3)

- Points deducted for terrible efficiency issues (not expected here).

- Points deducted for security problems (not expected here).

- Development experience (0-10)

- Delivery speed (0-6)

- Interactions needed (0-4)

As you can see, each section has a different weight in the evaluation. I believe it’s quite fair.

I'm not comparing the number of tokens or resource consumption because they're all fixed-cost subscriptions, so it doesn't matter. In a business context, it doesn’t matter if it costs $20 or $200—if it boosts efficiency by 20/30%, it’s worth it.

2 - Evaluation of Code Assistants for Technical Tasks

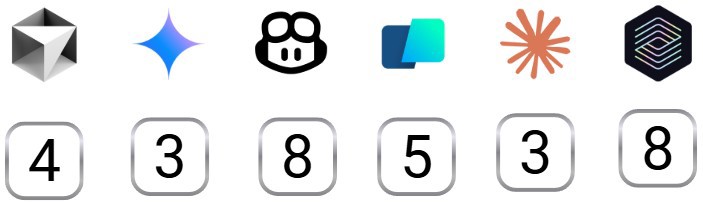

I selected the following code assistants for the task:

- Codex

- Copilot (within GitHub)

- Cursor

- Claude CLI

- Warp Terminal

- Gemini code assist

Why? Because they’re the most popular and common. I also intentionally excluded others like Grok, because those are just web chats, not code assistants, even if they can program well.

In summary: If I have to manually paste code, I don’t want it.

Before starting with the evaluations, since I don't want this post to be infinite scrolling, I won't include each step. I'll provide an explanation of each assistant during the evaluation; remember that each section is linked to a PR with the changes each assistant made.

If you need more context, you can find it in the linked video.

I also set a 45-minute limit, to see if they really help.

2.1 - Codex as a Code Assistant, Evaluation

Let's start with one I’ve worked with before, as seen in this post.

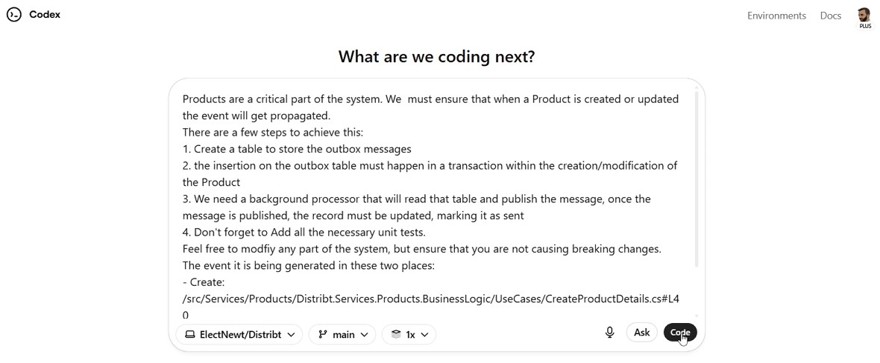

Codex is included in the ChatGPT Plus subscription ($20), and for setup, you simply grant access to your GitHub. Everything is via its interface and is very straightforward—once inside, you have a chat to assign tasks.

Once you send it, it starts working.

Code -> https://github.com/ElectNewt/Distribt/pull/47

NOTE: Codex has a CLI I could have used, but it's experimental for Windows, and I personally prefer the web interface.

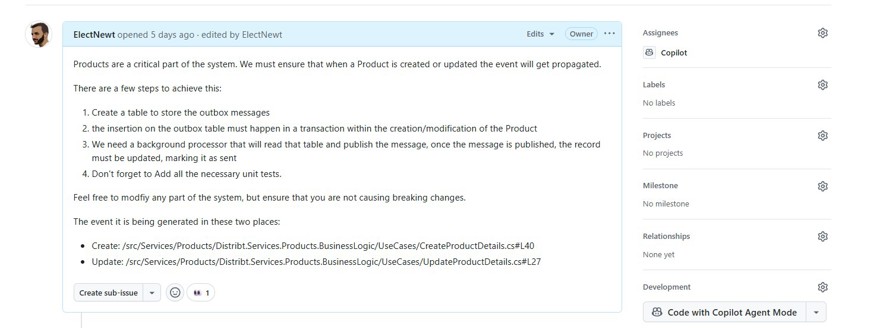

2.2 - Copilot as a Code Assistant

Many of you know Copilot as the assistant inside an IDE; it's likely the oldest of all assistants and has been used for years.

It works well for me; there’s a mode that operates on code as you type, and a chat mode that’s often better than searching for a solution online.

But that’s not what we’re testing in this video: Microsoft recently added the possibility to assign tasks to Copilot directly in GitHub for pro and enterprise accounts.

To use it, you simply assign a task to Copilot in GitHub:

And with that, it gets to work.

Code -> https://github.com/ElectNewt/Distribt/pull/48

2.3 - Cursor as a Code Assistant

Since the start of coding AIs and code assistants, new IDEs have emerged to harness their power. The most famous is Cursor, offering an interactive chat for actions. This chat loads multiple language models—in our case, we’ll use Claude.

If you’re interested, the pro version of Cursor costs $20/month, which is what I currently have. The agent I’ll use is Claude 4 Sonet.

Once you set the prompt, it gets to work.

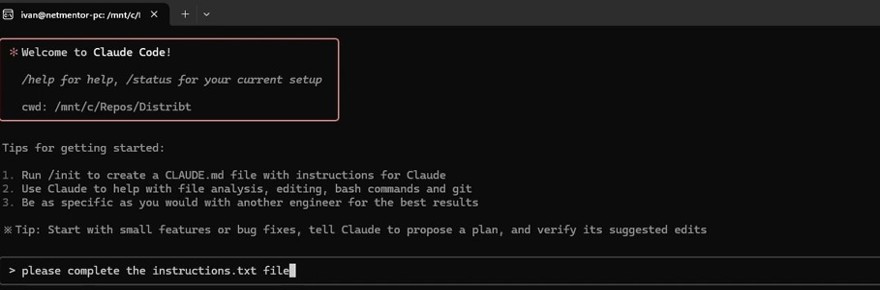

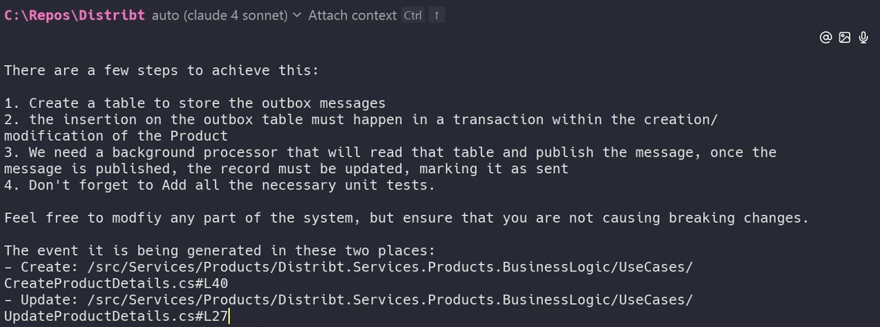

2.4 - Claude Code as a Code Assistant

Anthropic is the company behind Claude, the most popular LLM that always “wins” in programming benchmarks. They recently released a CLI, Claude code, which for $17 lets you use their code assistant and query it.

So our interactions are directly in a terminal.

2.5 - Warp CLI as a Code Assistant

I’ve mentioned it several times on this channel: the most powerful terminal on the market is Warp. With their recent Warp 2 release, they remain top of the line for terminals.

In this terminal, you can enable agent mode where, for example, Claude (though more are supported) can help you complete tasks efficiently. It doesn’t just send prompts to Claude; it has internal implementations (which isn’t the same as using Claude code).

It has a paid version, but for hobby projects, the free version is enough; for professional environments, it’s not. And I have a referral code if you’d like to use it.

With the free version, there was no issue indexing the project.

Code -> https://github.com/ElectNewt/Distribt/compare/ai-test/202507-Baseline...ai-test-202507-Warp?expand=1

2.6 - Gemini Code Assist as a Code Assistant

Lastly, let’s review the most recent code assistant release. This is also a CLI, from Google. For most developers, the free version is enough (with 1000 daily requests); paid gets you only 1500 plus extras not needed for this review.

So we send the prompt and get started.

3 - Evaluation of Results

As mentioned above, if you need to watch the process, it’s available in the video.

3.1 - Development Experience

I’ll start by analyzing the development experience. The task itself is simple, but it does have important aspects such as turning certain actions into a transaction, which makes the AI struggle, resulting in more interactions than necessary.

Here are the evaluation criteria:

- Development experience (0-10)

- Delivery speed (0-6)

- Interactions needed (0-4)

Note: when assessing delivery speed, I also count the time spent reviewing code. It’s pointless for it to be fast if reviewing it takes 10x longer.

Here are the results:

The explanation for each point is below.

3.1.1 - Development Experience with Cursor

Cursor was one of the worst experiences. For every change it tries to implement, it asks for your approval. That’s not bad in itself, but it doesn’t wait for your answer to continue, so it can generate code that doesn’t even compile. If it assumes you’ll accept everything, just make the changes without user input.

In terms of speed, Cursor was very fast making changes, but the main problem was that the changes were wrong, making code review and further iterations take a lot of time. With the time spent reviewing Cursor's code, it is definitely not among the fastest.

Small manual modifications were needed for the code to compile which adds time.

Development experience: 4

- Delivery speed: 3

- Interactions needed: 1

3.1.2 - Development Experience with Gemini

Gemini is a CLI and the experience, overall, is fine—acceptable. The initial prompt does not let you specify intros or a long prompt, though you can work around this with a file of instructions. Not ideal, but a solution.

The speed of iterations was good, very good, and the implementation itself was pretty good. Still, it did things in the code that were not needed for the process, even after being told otherwise in a later prompt. In fact, the final product doesn't work as expected.

Small manual modifications were needed for the code to compile, which adds time.

Development experience: 3

- Delivery speed: 1

- Interactions needed: 2

3.1.3 - Development Experience with GitHub Copilot

Unlike any other AI on the market, GitHub Copilot is directly integrated with GitHub; to get it to work on a task, all you have to do is assign it the ticket.

From there, it works in the background and notifies you when it’s done. It's a little slow compared to other solutions.

The agent itself doesn’t have permissions to run GitHub actions, but internally it runs the tests and ensures everything works. Once done, it creates a pull request that you can review, and any comments you leave will be fixed by the agent.

In this case, I only needed 3 comments; that was the only interaction, along with manually running the build. No other manual code changes were needed.

Development experience: 8

- Delivery speed: 4

- Interactions needed: 4

3.1.4 - Development Experience with Warp

Warp is a terminal; the experience is similar to other console apps. The difference is it doesn’t constantly ask for permission to change code.

Unfortunately, the process was not fast. Here the topic of tests is important. Developing the main logic took 3 minutes, which is nothing, but writing the tests took about 18 minutes because they’re complex. Also, halfway through it timed out (status code 504)—not a huge problem, but it requires user action.

Leaving those unexpected 18 minutes aside, the code needed changes and the second iteration took only 2 minutes (I stopped it because it wanted to write tests). That means the user has to monitor what the AI is doing.

Small manual modifications were needed for the code to compile.

Development experience: 5

- Delivery speed: 3

- Interactions needed: 2

3.1.5 - Development Experience with Claude

Claude is another CLI and also does not allow long prompts. So the solution is to create a file with the prompt. Not ideal, but it works. Claude is by far the fastest and does a decent job at that speed—but not a perfect one.

It requires constant user input for each command, unless you specify that all are accepted once per command.

In one interaction, it created most of the code, but it didn’t compile and there were several mistakes, as well as an extra layer that was unnecessary in my opinion.

On the second iteration, those were fixed, but I found several faults, and in fact, as you saw, there were quite a few interactions—four total, and it asked for more.

Small manual modifications were needed for the code to compile.

Development experience: 3

- Delivery speed: 3

- Interactions needed: 0

3.1.6 - Development Experience with Codex

Back to web interfaces—this time with OpenAI Codex, where you can integrate GitHub repositories. There’s a chat to discuss the project and assign tasks.

With the initial prompt, it returned something very structured and easy to read, needing only minor tweaks which can be specified in the web interface. With that second iteration, everything was good and you can create the PR from the interface.

The only downside is you can't see the diff between Codex iterations. Each time you review the code, you have to go through all of it because you don't know what changed.

It’s fairly fast—first iteration took 13 minutes and the second 4 minutes. No other inputs required besides a couple of comments during the review. All code compiles fine and tests pass.

A speed downside is you have to monitor the website to review, though this is the case for all except GitHub Coding Agent, which emails you.

Development experience: 8

- Delivery speed: 5

- Interactions needed: 3

3.2 - Can Artificial Intelligence Generate Functional Code?

One of the most common questions in IT now is whether AI code is maintainable. That’s what we’ll check now.

I must say, implementations were very similar across them all.

- Functional correctness (0-20)

- Fulfills requirements (0-10)

- Maintainable (0-10)

- Easy to understand and update (0-5)

- Unit test evaluation (0-2)

- Consistency in standards and style (0-3)

Points are deducted for terrible efficiency issues, though I don't expect any here.

Points are deducted for security problems (not expected here).

Fulfilling requirements is straightforward: both update and creation must use an outbox table, regardless of implementation. There must be a background worker, and we must have use-case tests that pass.

3.2.1 - Can Cursor create functional code?

The experience with Cursor was confusing for me. At first, it generated a huge quantity of files, without maintaining any style consistent with what was there.

It created duplicated unused code, placed several README files outside the code that repeat the same ideas, which in my opinion are unnecessary.

It created new classes without removing the old ones, though most failed here. There are a lot of unit tests, but most don’t pass due to the Entity Framework and in-memory database implementation.

Functional correctness: 12

- Fulfills requirements: 8 (missing working unit tests)

- Maintainable: 4

- Easy to understand and update: 3

- Unit test evaluation: 0

- Consistency in standards and style: 1

3.2.2 - Can Gemini create functional code?

At first, Gemini seemed great, but it ended up being a frustrating process because it didn’t understand the instructions. It kept creating a system to publish events with RabbitMQ, even though the application already has one and Gemini couldn't read the context.

It also created two unit tests that aren't run because of transactions—the AI lacks knowledge (others handled this) to solve the problem. For the database, it also created a relationship between the product and outbox tables, which is unnecessary.

Still, it tried to create the outbox system in a shared project. It’s the only AI that attempted this, matching project best practices. \In practice, this doesn't work and requires manual correction.

Functional correctness: 9

- Fulfills requirements: 6 (tests don’t work; incorrect RabbitMQ backgroundworker implementation)

- Maintainable: 3

- Easy to understand and update: 2

- Unit test evaluation: 0

- Consistency in standards and style: 1

3.2.3 - Can GitHub Copilot create functional code?

The answer is resoundingly yes: it was a bit slow, but Copilot agent worked like any developer would. At the end, it puts up the PR. Within GitHub, you can see the different commits and the reasoning process.

Reviewing the code, the implementation is good. It created a new entity for the outbox table and updated the SQL. On the first try, it made a couple of unnecessary things. The background worker is well built and is the only one to separate the logic for the background worker from actual execution.

The tests worked first time—one of the few where this happened. As with Codex, I didn’t need to make any manual code changes.

The only debatable point is that the database layer has a couple of large IF statements to check if the DB is in-memory, which could be refactored for maintainability.

Functional correctness: 19

- Fulfills requirements: 10

- Maintainable: 9

- Easy to understand and update: 4

- Unit test evaluation: 2

- Consistency in standards and style: 3

3.2.4 - Can Warp create functional code?

Warp’s final implementation is good. It updated the use cases to apply transactions inside the use case itself, which is my preferred implementation.

It allowed for mocking the database layer in the tests, making those tests much simpler, though they are very extensive and could be shortened a bit.

It created some things that aren’t strictly necessary, like an interface for the Background processor contract, but the processor implementation is fine. Ideally, it would separate the processor from the logic being executed.

In general, it’s good code.

Functional correctness: 16

- Fulfills requirements: 10

- Maintainable: 6

- Easy to understand and update: 3

- Unit test evaluation: 1

- Consistency in standards and style: 2

3.2.5 - Can Claude create functional code?

Claude's final implementation was quite good, though it required more interactions than other agents. It could also have cleaned up obsolete code, an issue shared by most.

The background worker implementation is correct, but the tests don’t work because of in-memory transaction issues. Maybe with more prompts, it could have been fixed, but Claude used four prompts for this ticket and it’s still not perfect.

Functional correctness: 13

- Fulfills requirements: 8 (test issue)

- Maintainable: 5

- Easy to understand and update: 3

- Unit test evaluation: 0

- Consistency in standards and style: 2

Personally, I was disappointed by Claude; I had high hopes for it.

3.2.6 - Can Codex create functional code?

Before starting the test, I had an idea of what I’d find with Codex—and I wasn’t disappointed. It was a success.

The task implementation is correct; it could delete unused data layer code (something all except Warp failed to do).

Tests were created and work, though all tests are in a single file instead of grouped by class.

The background worker implementation is also correct.

Functional correctness: 17

- Fulfills requirements: 10

- Maintainable: 7

- Easy to understand and update: 5

- Unit test evaluation: 1

- Consistency in standards and style: 2

4 - Final Conclusion

Here are the final evaluations:

I've been working with AI every day in my job, mainly with Copilot, for quite some time—like most reading this, I imagine.

Of all the AIs I tried, they all market themselves as code assistants or solutions for autonomous real programming. In my opinion, only two actually deliver on this: Copilot Agent and Codex. The rest need far more manual input.

What I wanted was for all agents to be able to code mostly independently. While they all technically code, they’re not there yet. Other than Copilot and Codex, only Warp managed to create correct code: tests, SQL file, and compiling without issues.

For the rest—Claude, Gemini, Cursor—either they don’t really understand C# or they don’t really understand the project, which is a simple one.

They’re useful as rubber ducks for asking questions, but nowhere near the level of implementing what Codex or Copilot are doing.

If I had to pay for one only, the question would be whether to pay for Codex (since it comes with ChatGPT) or GitHub Pro (which includes Copilot in the IDE). It’s a matter of evaluating which is more useful, and if you'll use it for more than just coding.

Maybe Warp, if you work a lot in the console or connect to servers, since the AI can also act on your SSH session. But the others I wouldn’t even consider.